Exponential Sludge

"Artificial intelligence" is more of the same old story of technology for profit.

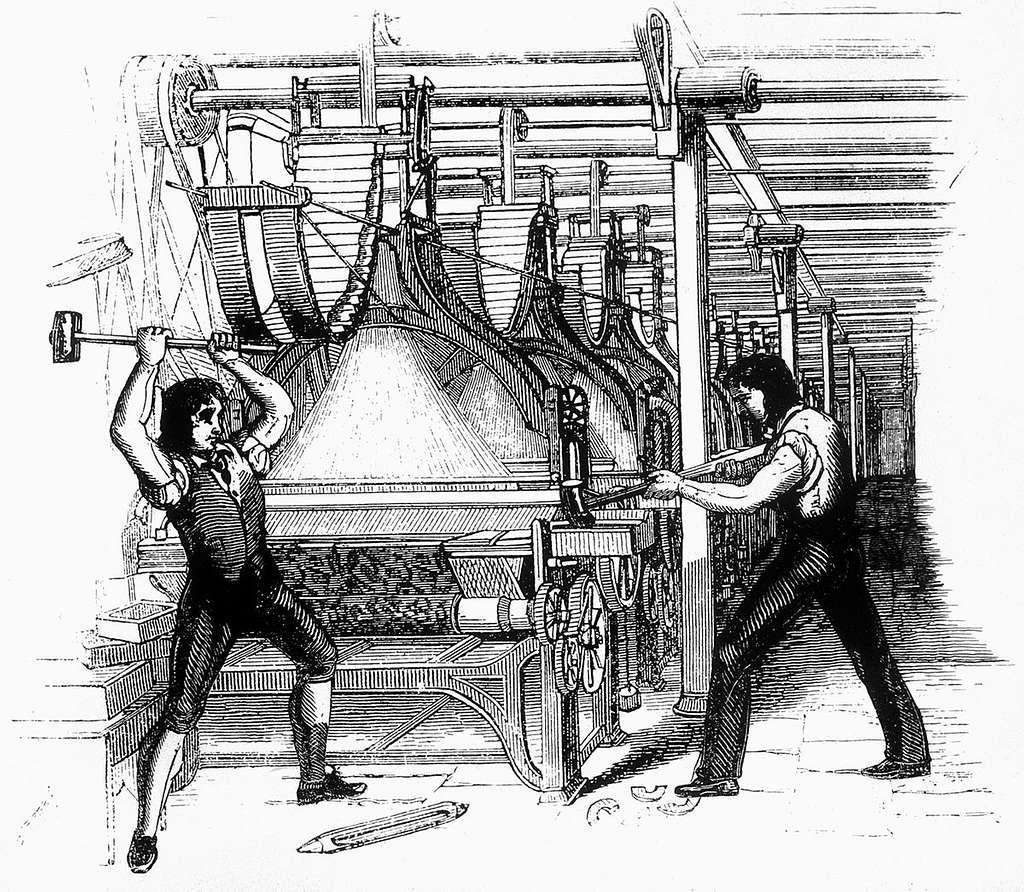

The Luddites were a group of 19th-century English textile workers who famously smashed machines with hammers. Hence, in common parlance, “Luddite” is a pejorative term for someone who stubbornly rejects modern technology, whether a particular innovation or technology in general. In recent years, however, a growing movement of scholars and activists has sought to reframe the Luddites positively, challenging the popular caricature of these misunderstood workers. The Luddites were not technophobic per se; they were rebelling against deteriorating working conditions and the devaluation of their labor. And they were met with severe state repression for it, including execution.

Luddism is particularly relevant today when it comes to so-called AI, a topic that has seen a recent resurgence of interest, funding, and propaganda. Software like OpenAI’s ChatGPT and Google’s Bard will supposedly evolve to become either the cause of or the solution to all of humanity’s problems. But these programs are, as writer Evgeny Morozov put it, “neither artificial nor intelligent.” This generative “AI” is based on large language models that predict which words should come next and thereby produce humanlike text; they are not, and will never be, self-aware. Such models are trained on mountains of human-produced work, and building and running the computers they depend on requires ever more energy, water, raw materials, and labor. These programs also require a “vast underclass” of poorly paid, traumatized, and exploited workers to label and sort enormous quantities of data.

ChatGPT is good at generating content, but what is the actual utility of that? The way Silicon Valley guys talk about “AI” reveals stunning levels of spiritual hollowness and self-interested vacuousness. The idea of ubiquitous “AI” “art” is a nightmare only a capitalist could conjure, and it reflects a deep misunderstanding of both the purpose of art and the limitations of the technology. Such programs, even if dramatically improved, could only ever offer digital high-fructose corn syrup, because there is no creative expression behind them: a computer program has nothing to say and no truths to reveal.

Signal president and AI scholar Meredith Whittaker has noted that “AI” is really a marketing term rather than an accurate description of the technologies in question. Venture capitalists have a vested interest in selling us on both extreme promise and extreme peril to attract funding, sell products, charm regulators, and discipline labor. Invoking the specter of AI works much like hiring consultants: it diffuses responsibility and provides an after-the-fact justification for bosses to do what they wanted to do in the first place. Actually existing “AI” cannot write quality articles or scripts; whatever text it generates would need to be reshaped by a human hand to be even semi-useful. But that provides the perfect excuse to pay your workers less as “AI content editors” rather than as staff writers.

The idea of producing monetizable media without all those pesky labor costs is obviously enticing to CEOs and investors, as the ongoing Writers Guild of America (WGA) and Screen Actors Guild–American Federation of Television and Radio Artists (SAG-AFTRA) strikes make especially clear. The topic of “AI” has loomed large over this labor dispute, after what was thought to be a minor bargaining item turned into a major flashpoint. The studios aim to scan and record actors’ likenesses and voices for unlimited digital reproduction and to use generative “AI” in the script production process, all to pay workers less so that shareholders and executives can, in theory, make even more money.

Relatedly, the main place where we do see technological skepticism represented in the mainstream, especially around AI, is in stories created by these very same writers: Black Mirror, 2001: A Space Odyssey, Ex Machina, The Matrix, and many more. When people discuss their fears about AI, they almost always reference Skynet from the Terminator series. And in the universe of Dune, there are no computers because of the Butlerian Jihad, a movement that destroyed all “thinking machines” thousands of years before the events of the story. Its central commandment: “Thou shalt not make a machine in the likeness of a human mind.” The functions of computers were taken over by highly specialized, augmented humans. The Butlerian Jihad went so far as to destroy calculators.

I have no beef with calculators, but seeing the trajectory we are on, I can understand getting carried away. Generative “AI” is allowing people to churn out fraudulent and dangerously misleading books, and it is further exacerbating existing axes of oppression. It writes horrible articles. And if these “AI” programs start training on the sludge they produce, the result could be a doom spiral of compounding defects: a model can only ever be as good as its inputs.

The internet, which holds the promise of connecting all of humanity and giving us access to all of our collective knowledge, seems to be getting worse. Algorithmically generated garbage designed to game search rankings has already drastically reduced the usefulness of Google. Nowadays, when you type something into a search engine, one of the top suggestions is almost always your query with “Reddit” appended, evidence that fellow web surfers are trying to reach useful information that actually comes from humans (Google is well aware of this). Elon Musk’s Twitter continues to deteriorate in its owner’s image, and a mere 18 months after Facebook renamed itself Meta in an ostensible demonstration of its commitment to the transparently ridiculous idea of the metaverse, Mark Zuckerberg has apparently already pivoted to “AI,” the next desperate hope for growth.

Zuckerberg and Musk joined a closed-door summit on the topic at the US Senate last week, alongside some other obscenely rich AI evangelists (the total wealth represented was approximately $550 billion) and a few actual experts. The contradictions were apparently on full display:

> Mr. Musk, who has called for a moratorium on the development of some A.I. systems even as he has pushed forward with his own A.I. initiatives, was among the most vocal about the risks. He painted an existential crisis posed by the technology.
>
> “If someone takes us out as a civilization, all bets are off,” he said, according to a person who was in the room. Mr. Musk said he had told the Chinese authorities, “If you have exceptionally smart A.I., the Communist Party will no longer be in charge of China.”
>
> Deborah Raji, a researcher at the University of California, Berkeley, responded to Mr. Musk by questioning the safety of driverless cars, which are powered by A.I., according to a person who was in the room. She specifically noted the autopilot technology of Tesla, the electric carmaker, which Mr. Musk leads and which has been under scrutiny after the deaths of some drivers.
>
> Mr. Musk didn’t respond, according to a person who was in the room.

I do not know whether these guys are lying to us or themselves (or both), but it does not really matter. It seems quite clear that, unless you are a capitalist, the utility of generative “AI” is limited at best (and downright negative in many cases) for the foreseeable future, and there is no reason to think that we are heading towards some sort of transcendental leap forward. Rather than being the birth of a digital deity and a new epoch, the current fervor around “AI” is perhaps best understood as merely more of the same. More junk search results and enshittification of the internet, more precarity and gigification of work, and more pollution and destruction of our biosphere to serve the whims of the profit motive.

Capitalism systemically fosters the mystification of the labor and nature involved in production, and it is structurally biased toward ever newer and more complex high technology, prized for its exchange value rather than its use value. But newer and more complex is not necessarily better, and in fact is often demonstrably worse; consider how unsustainable and unjust industrial agriculture is prioritized over agroecology. Our society is not collectively better off with factory farming, “smart” toothbrushes, electric Hummers, or news articles poorly written by a computer program instead of a journalist.

The most important thing to understand about the current wave of “AI” speculation is that, beneath the hype and sheen, everything is a labor story and everything is an ecological story. Technology is not magic; it is a product of the material world and therefore has inputs and outputs like anything else. In a time of escalating climate and environmental crisis, who gets to decide whether we should use copious resources (with all the consequent greenhouse gas emissions and ecological degradation) to train and run more large language models? And who is getting exploited for it? What technology gets researched and deployed, and where, when, and how, should be decided democratically, by and for people, rather than by billionaires’ whims.

That is the real lesson of the Luddites, which we should take to heart for our collective dignity and wellbeing. Technology is never inevitable, and the future is unwritten. Personally, I would much rather it be written by the workers of the world than a corporate chatbot.